Press

Overview

The purpose of this paper is not the reproduction of a particular appearance, or the modelling of a specific material.

The purpose of this paper is to enable sampling, that is backward light transport, of arbitrary wave-optical distributions of light in complex, real-life scenes.

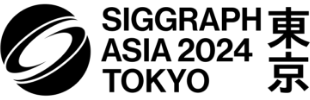

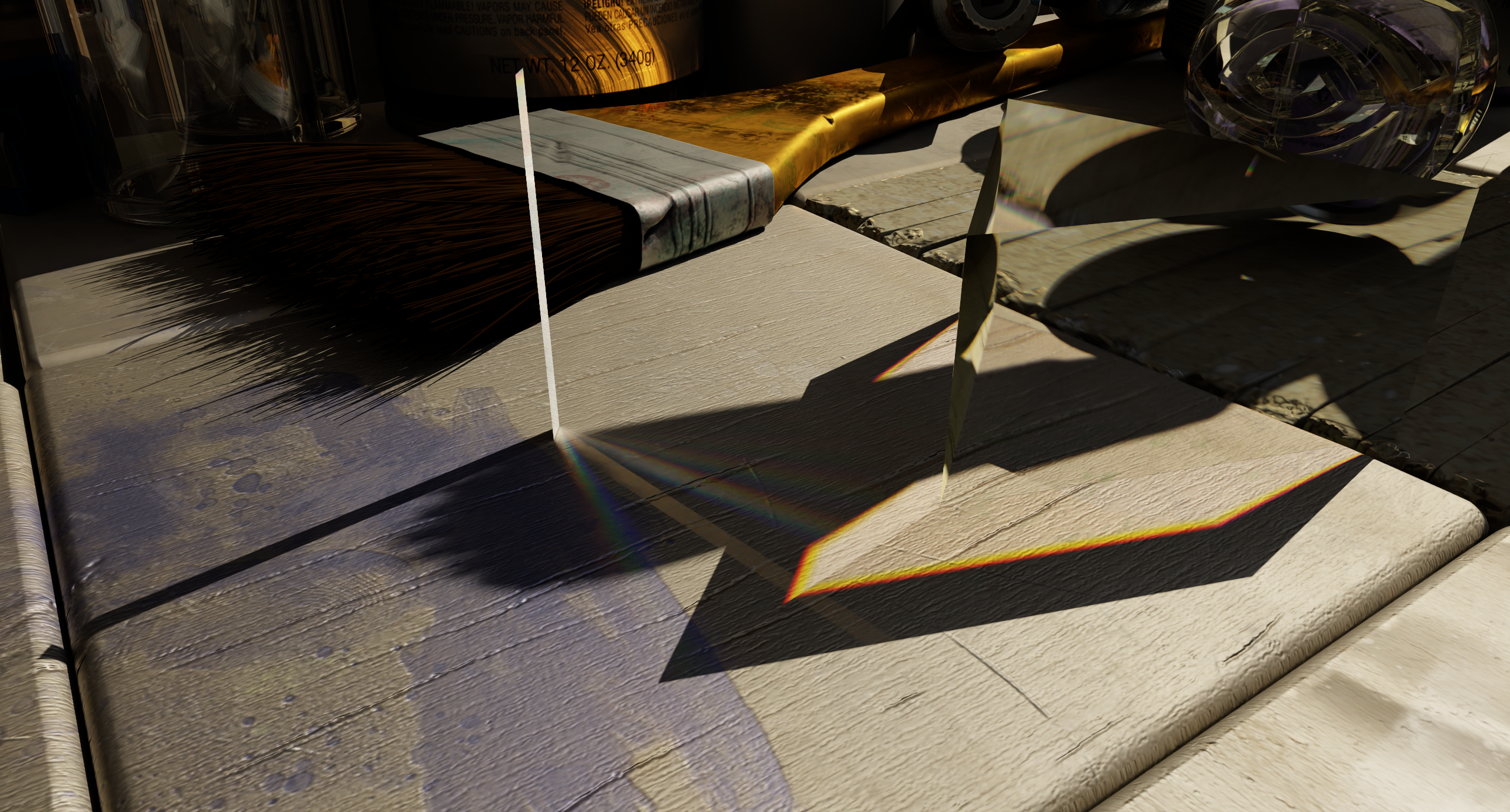

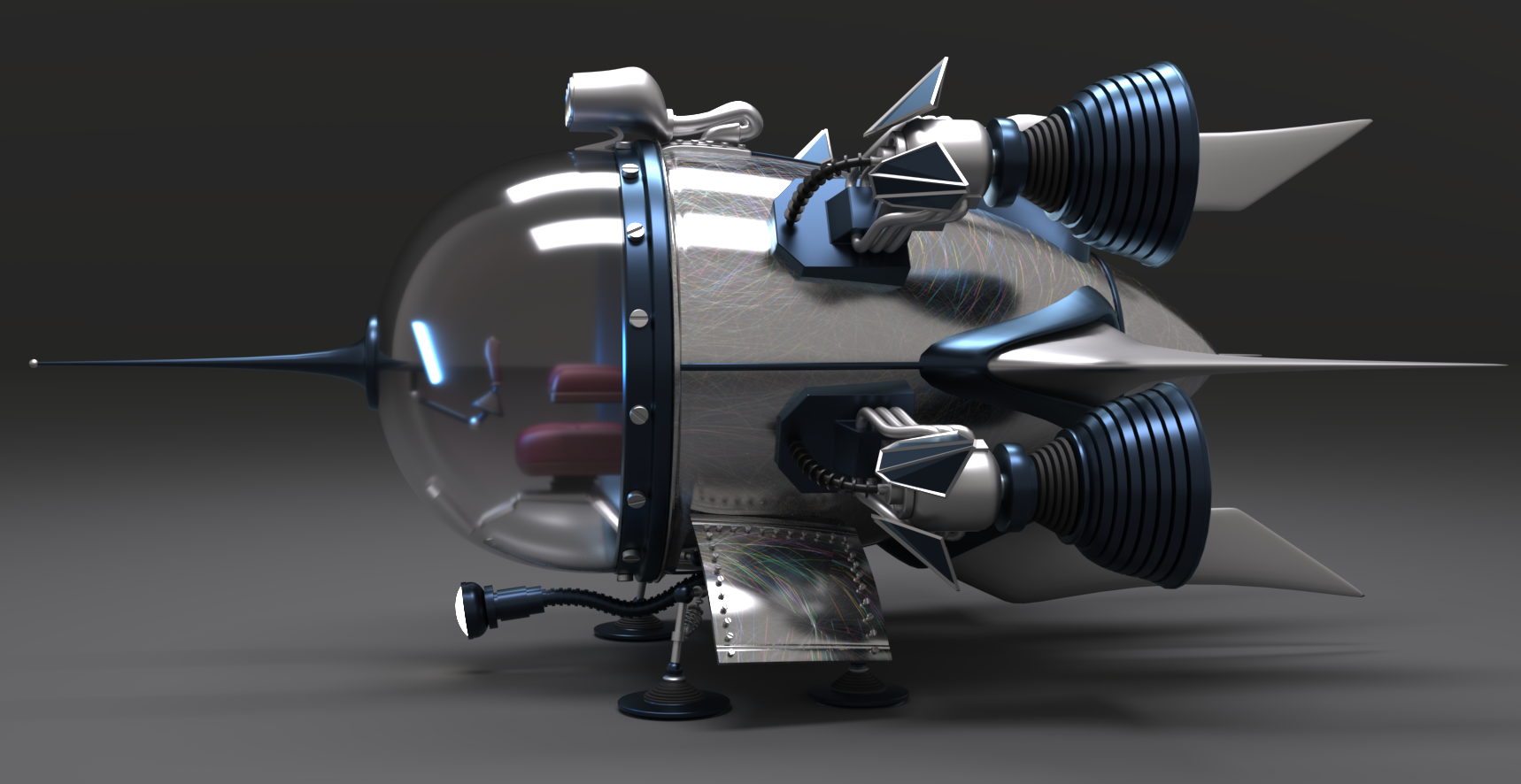

The rendered images and materials in this work may not appear photorealistic. For example, the iridescent scales of the Rainbow Boa are modelled as a simple two-dimensional diffraction grating, which is not entirely faithful to the real-world material.

A more faithful material model is possible, but that is not the point.

What these renderings do demonstrate is our ability to accurately reproduce general wave-interference and diffraction effects when doing backward light transport (from the eye or camera) and applying a variety of sophisticated rendering and path tracing tools.

This was not achieved by previous work.

In other words, the core contribution and motivation for this work is enabling the application of a much wider range of rendering and light transport tools to wave optics, and not so much the reproduction of some appearance or particular visual effect.

Wave Optics: Locality and linearity

Simulating the behaviour of light under rigorous wave optics in complex scenes is often difficult, because wave optics admits no clear linearity or locality.

- Linearity is lost because waves interfere: when “adding up” light packets, they might annihilate each other (destructive interference) or add up to an intensity that exceeds the sum of their individual intensities (constructive interference). Either way, intensities do not superpose linearly.

- Locality is lost as wave optics is a global process: there is, in general, no clear way to decompose light into local, linear packets that propagate into a narrow set of directions.

It is not trivial to “cut” waves into smaller waves.
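The loss of linearity can be seen in a toy calculation. The sketch below is only an illustration, not part of this work's method: it assumes two scalar, unit-amplitude, fully coherent waves (the function names are hypothetical) and compares the intensity of their superposition with the sum of their individual intensities.

```python
import cmath
import math


def intensity(field: complex) -> float:
    """Observed intensity of a scalar complex field amplitude."""
    return abs(field) ** 2


def superposed_intensity(phase_difference: float) -> float:
    """Intensity of two unit-amplitude coherent waves with a relative phase."""
    wave_a = cmath.rect(1.0, 0.0)
    wave_b = cmath.rect(1.0, phase_difference)
    # Fields add; intensities do not.
    return intensity(wave_a + wave_b)


# Each wave alone has intensity 1, so a linear model would predict 2.
sum_of_intensities = intensity(cmath.rect(1.0, 0.0)) + intensity(cmath.rect(1.0, 0.0))

constructive = superposed_intensity(0.0)      # in phase
destructive = superposed_intensity(math.pi)   # out of phase

print(f"sum of intensities: {sum_of_intensities:.3f}")
print(f"constructive:       {constructive:.3f}")
print(f"destructive:        {destructive:.3f}")
```

In phase, the superposition carries twice the intensity a linear model would predict; out of phase, it carries essentially none.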

Classical rendering techniques rely on a linear rendering equation to (Monte-Carlo) integrate. They also require propagating local packets of energy (for example, a ray) in order to: (i) make use of spatial-subdivision acceleration structures, crucial to making path tracing an O(log n) algorithm instead of a linear one; and (ii) simplify the formulation of light-matter interaction, which now happens at a localized point, and not over a region containing multiple, mutually-interfering scatterers.

If you forgo linearity and locality, you need to consider the mutual interference of the entire scene with itself, for example via highly-impractical wave solving.

Previous work that targets wave-optical rendering, for example A Generic Framework for PLT and Towards Practical Physical-Optics Rendering, regains a degree of locality and linearity by propagating forward partially-coherent light.

Because such a decomposition depends on the wave properties of light, these properties need to be known in order to propagate light and evaluate its interaction with matter.

This can be quite limiting in the kind of rendering tools we may use.

While these previous works are able, at least in theory, to render any scene that you see in this paper, when the light transport gets complex, as in the snake enclosure scene, they would require a practically impossible number of samples and runtime.

To achieve a local and linear description of light we take a novel approach:

Instead of dealing with the light field directly and finding means to decompose it into local and linear packets, this work considers the eigenstates of photoelectric detection (i.e., our generalized rays).

This change of physics, from quantifying light to quantifying its observable artefacts, is what enables an accurate decomposition into simple, mutually-incoherent (i.e. linear) constructs. These constructs are used to sample and perform backward light transport, and thereby simulate (the observable response of) wave optics in complex scenes.

Unlike previous work, this decomposition is exact.
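Why mutual incoherence amounts to linearity can be sketched with a toy calculation. This is not the decomposition used in this work; it only assumes two scalar waves whose relative phase is uniformly distributed (a simple stand-in for mutual incoherence), and the function name is hypothetical. Averaged over that phase, the interference term vanishes and the intensities simply add.

```python
import cmath
import math


def mean_intensity_incoherent(amp_a: float, amp_b: float, steps: int = 360) -> float:
    """Average |a + b|^2 over a uniformly distributed relative phase."""
    total = 0.0
    for k in range(steps):
        phase = 2.0 * math.pi * k / steps
        field = amp_a + cmath.rect(amp_b, phase)
        total += abs(field) ** 2
    return total / steps


amp_a, amp_b = 1.0, 2.0
mean = mean_intensity_incoherent(amp_a, amp_b)
linear_sum = amp_a ** 2 + amp_b ** 2

# The phase-averaged intensity matches the plain sum of intensities.
print(f"phase-averaged intensity: {mean:.6f}")
print(f"sum of intensities:       {linear_sum:.6f}")
```

Because such contributions add like ordinary energies, they can be accumulated by a standard Monte-Carlo estimator without tracking relative phase between them.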

Fast Forward

Renders